> log #11 — Midjourney: A/B test your aesthetics

200 image comparisons later, I trained Midjourney on my aesthetics, from foggy coastlines to urban decay and citrus-scented cakes.

I unlocked my Midjourney V7 profile. Not a vibe, not a filter — a custom aesthetic fingerprint trained entirely from me clicking on pairs of images and saying “I like this one more.”

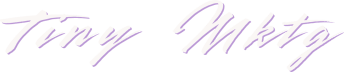

So what is this “profile”? And how does choosing between a kawaii garlic bottle and a moody 7-Eleven at dusk actually train an image model?

Let’s break it down.

What is a Midjourney Profile?

Your Midjourney profile is best understood as a latent vector: a bundle of learned aesthetic preferences that the V7 model uses to shape how your prompts get interpreted. (Midjourney hasn’t published the internals, so treat that as a mental model.) When you unlock your profile, you get a personal --p code you can append to any prompt to nudge it toward your taste.

It doesn’t override the prompt. It steers the interpretation.

Love film grain, muted palettes, and surreal lighting? Your profile might de-emphasize saturated, cartoonish styles and favor cinematic compositions instead. Prefer stark minimalism? The model will lean away from busy textures.

You don’t set this manually. Midjourney learns it from how you vote.

How the Voting Works

To unlock your profile, Midjourney asks you to compare 200 image pairs. Each pair is a mini A/B test generated by the model with controlled variation:

One might be ultra-polished, the other rough and expressive

One might have cool tones, the other warm

One might use wide angle, the other a portrait crop

Your only job: Pick which one you like more.

These are not trivial differences. You’re teaching the model your gut-level aesthetic preferences — and because the source images were generated from known prompts and known parameter configurations, your selections provide valuable training signals.

Under the Hood: Preference Modeling

Midjourney hasn’t published the exact mechanics, but here’s a reasonable model of what’s happening:

Each image shown is tied to its prompt + latent noise + seed + settings.

Your choices are interpreted as “I prefer image A over image B given the same base prompt context.”

This creates a ranking function for your taste, modeled as a latent preference vector.

When you use your profile code, that vector biases future renders toward your taste. Whether that happens through a small fine-tuned component or an extra conditioning signal isn’t public.

This is called pairwise comparison learning or ordinal preference learning, and it’s a staple of human-in-the-loop model alignment. Midjourney doesn’t need to know why you liked an image—just that you liked it more.

Think of it as training a personalized aesthetic model with 200 comparative data points.
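To make “pairwise comparison learning” concrete, here’s a minimal Python sketch of the classic Bradley-Terry (logistic) version. It assumes each image can be summarized by a few made-up feature scores (warmth, haze, grain, saturation, clutter), which Midjourney does not expose; the simulated voter and the “true” taste vector are invented too. The model says the probability you pick A over B is sigmoid(w · (x_A - x_B)), and fitting w to your votes gives you a preference vector.

```python
import math
import random

# Hypothetical feature names: Midjourney does not expose per-image features,
# so these are stand-ins for whatever the real model represents internally.
FEATURES = ["warmth", "haze", "grain", "saturation", "clutter"]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_preference_vector(pairs, dim, lr=1.0, epochs=500):
    """Bradley-Terry style fit: P(winner beats loser) = sigmoid(w . (x_w - x_l)).

    `pairs` is a list of (winner_features, loser_features) tuples.
    Uses full-batch gradient ascent on the average log-likelihood.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for winner, loser in pairs:
            diff = [a - b for a, b in zip(winner, loser)]
            # d/dw log sigmoid(w . diff) = (1 - sigmoid(w . diff)) * diff
            scale = 1.0 - sigmoid(dot(w, diff))
            for i in range(dim):
                grad[i] += scale * diff[i]
        w = [wi + lr * g / len(pairs) for wi, g in zip(w, grad)]
    return w


def simulate_votes(true_taste, n_pairs=200, seed=7):
    """Simulate a voter whose choices follow the same logistic model."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_pairs):
        a = [rng.random() for _ in true_taste]
        b = [rng.random() for _ in true_taste]
        p_a = sigmoid(dot(true_taste, [x - y for x, y in zip(a, b)]))
        pairs.append((a, b) if rng.random() < p_a else (b, a))
    return pairs


def cosine(u, v):
    return dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))


# A made-up "true" taste: loves warmth, haze, grain; dislikes saturation, clutter.
true_taste = [2.0, 3.0, 1.5, -2.5, -1.0]
learned = fit_preference_vector(simulate_votes(true_taste), dim=len(FEATURES))
for name, weight in zip(FEATURES, learned):
    print(f"{name:>10}: {weight:+.2f}")
print("cosine(learned, true):", round(cosine(learned, true_taste), 3))
```

Notice the fit never learns *why* an image won, only which way the choices lean across 200 simulated votes.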

What Changes With a Profile?

After unlocking, your profile code (--p [your-profile-id]) can be added to any prompt. It doesn’t change the structure or rules of the prompt. But it gently biases the output toward your known preferences.

That might mean:

Slightly altered composition choices

More of the color tones you favor

Better alignment with your desired visual language (e.g., painterly vs photoreal)

It’s not deterministic. It’s directional.

You’re not telling Midjourney what to do. You’re showing it what you gravitate toward—and letting it handle the rest.
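“Directional, not deterministic” can be sketched as a toy too. The Python below assumes, hypothetically (Midjourney hasn’t said how --p is applied), that a profile nudges a prompt embedding a short way toward your taste vector while every render still adds random seed noise. The embedding, the profile vector, and the `steer`/`render` helpers are all invented for illustration.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(v):
    return math.sqrt(dot(v, v))


def steer(prompt_vec, profile_vec, strength=0.3):
    """Nudge a prompt embedding toward the profile direction.

    The step is a fixed fraction of the prompt's own length, so the prompt
    still dominates. Hypothetical mechanism, not Midjourney's actual one.
    """
    unit = [x / norm(profile_vec) for x in profile_vec]
    step = strength * norm(prompt_vec)
    return [p + step * u for p, u in zip(prompt_vec, unit)]


def render(conditioning, rng, noise=0.5):
    """Toy stand-in for a generator: output = conditioning + random seed noise."""
    return [c + rng.gauss(0.0, noise) for c in conditioning]


profile = [2.0, 3.0, 1.5, -2.5, -1.0]  # toy taste vector
prompt = [1.0, 0.0, 0.5, 0.2, 0.0]     # toy embedding of one prompt
steered = steer(prompt, profile)

rng = random.Random(0)
base_scores = [dot(profile, render(prompt, rng)) for _ in range(1000)]
steered_scores = [dot(profile, render(steered, rng)) for _ in range(1000)]

print("similarity to original prompt:", round(dot(prompt, steered) / (norm(prompt) * norm(steered)), 3))
print("mean taste score, no profile:  ", round(sum(base_scores) / 1000, 2))
print("mean taste score, with profile:", round(sum(steered_scores) / 1000, 2))
print("some unsteered renders still win:", max(base_scores) > min(steered_scores))
```

In this toy, the steered embedding stays close to the original prompt, the average taste score goes up, and individual renders still overlap. Raising `strength` pushes harder toward your taste at the cost of drifting further from the prompt.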

🧪 Style Tests: With vs. Without My Profile

After unlocking my profile, I wanted to see what actually changed. Did my aesthetic fingerprint really shift the outputs? Or was the difference just placebo and pixie dust?

To test it, I ran the same prompts twice: once with my profile code (--p 3e0b352c...) and once without. The goal wasn’t realism vs. fantasy; it was taste. Did the images get moodier, hazier, more Tumblr-coded? Did all my soft-blur votes bias the lighting? Would food get glowier? I chose three prompts (a foggy NorCal coastline, a quiet town at dusk, and a styled olive oil cake) to find out.
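If you want to repeat this test, the bookkeeping is simple: keep every parameter identical and add --p to only one copy. Here’s a small Python helper that builds the two prompt strings. `ab_prompts` and the `YOUR-PROFILE-CODE` placeholder are hypothetical, and the output is meant for pasting into Midjourney, not for calling any API.

```python
import re


def ab_prompts(prompt: str, profile_code: str) -> tuple[str, str]:
    """Return (baseline, personalized) copies of one prompt for a side-by-side test.

    Only the second copy gets `--p <profile_code>`, so the two runs differ in
    exactly one parameter. This only builds strings; it calls no Midjourney API.
    """
    base = prompt.strip()
    if re.search(r"(^|\s)--p(\s|$)", base):
        raise ValueError("prompt already has a --p parameter; remove it for a clean baseline")
    return base, f"{base} --p {profile_code}"


# Shortened version of Prompt 03; "YOUR-PROFILE-CODE" is a placeholder.
baseline, personal = ab_prompts(
    "A golden olive oil cake sliced on a rustic wood table --ar 16:9 --v 7",
    "YOUR-PROFILE-CODE",
)
print(baseline)
print(personal)
```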

Let’s see what my model learned about me.

🧊 Prompt 01: Cold Coastal Gaze

A foggy Northern California coastline at dawn — cliffs covered in wind-bent cypress trees, soft light breaking through the marine layer, lomography style, dreamy blur, faint sun flare through salt mist — vintage film look, muted tones, Tumblr aesthetic — overcast sky, still water — shot on expired 35mm film --ar 16:9 --v 7

→ Test with and without --p 3e0b352c-3a9c-409f-81d3-4d19a8d3145c

🌇 Prompt 02: Urban Nostalgia Loop

A quiet side street in a small coastal town — teal and rust-colored storefronts, empty sidewalks, dusk light glowing through fog, telephone wires crisscrossing the sky — lomography style, Tumblr aesthetic, slightly surreal — visible light grain, washed-out colors, film bloom — no people --ar 16:9 --v 7

→ Test with and without --p 3e0b352c-3a9c-409f-81d3-4d19a8d3145c

🍰 Prompt 03: Soft Food Still: Olive Oil Cake

A golden olive oil cake sliced on a rustic wood table, natural daylight from the side, soft shadows, lemon zest and thyme scattered on top, ceramic plate, wrinkled linen napkin — slightly imperfect, natural styling — soft focus, shallow depth of field — realistic food photography, subtle light haze --ar 16:9 --v 7

→ Test with and without --p 3e0b352c-3a9c-409f-81d3-4d19a8d3145c

📝 Note: this one should reveal how food-aware your profile is compared with default realism

🔚 Verdict: The Profile Works—Quietly

After running the side-by-side tests, one thing became clear: the profile didn’t make my generations radically different—it made them recognizably mine.

In every pair, the personal images (right side, lime outline) leaned closer to the aesthetic I would have crafted manually: softer light, deeper haze, more emotional tone. They felt more Tumblr. More me. The difference wasn’t loud, but it was unmistakable—like when someone adjusts the EQ just right on a song you already liked.

If I had to pick just one image from each set of eight, I’d 100% pick from the personal outputs every time.

That’s not magic. That’s a preference-trained aesthetic vector nudging Midjourney toward what I consistently told it I loved.

And now that it knows what I like? I’m never going back.

Where Else Is This Used?

The technique Midjourney uses to train your taste profile—pairwise comparison learning—is everywhere in modern AI. Whenever a system asks you to choose “Which of these is better?”, it’s learning not from labels, but from your preferences.

Here are a few major examples:

🧑‍⚖️ 1. ChatGPT & Reinforcement Learning from Human Feedback (RLHF)

OpenAI fine-tuned ChatGPT using preference rankings from human labelers. Given several outputs for the same prompt, labelers ranked them from best to worst. Those rankings trained a reward model, and ChatGPT was then optimized against that reward model with reinforcement learning, pushing it toward what people preferred rather than just what was technically correct.

✎ This is a big part of how ChatGPT learned to be helpful, harmless, and honest: by absorbing subjective preference signals, not just right/wrong answers.
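The ranking-to-preference step is easy to show. In the published InstructGPT recipe, a ranking of K completions is split into every possible (better, worse) pair, and the reward model is trained to minimize -log sigmoid(r_better - r_worse). This Python sketch uses four made-up completions and hand-picked reward values rather than a real model.

```python
import itertools
import math


def ranking_to_pairs(ranked):
    """A best-to-worst ranking of K completions implies K*(K-1)/2 (winner, loser) pairs."""
    # combinations() keeps list order, so the first item of each pair ranks higher.
    return list(itertools.combinations(ranked, 2))


def reward_model_loss(rewards, pairs):
    """Mean of -log sigmoid(r_winner - r_loser) over all comparison pairs."""
    total = 0.0
    for winner, loser in pairs:
        margin = rewards[winner] - rewards[loser]
        total += math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))
    return total / len(pairs)


# A labeler ranked four hypothetical completions: C best, B worst.
pairs = ranking_to_pairs(["C", "A", "D", "B"])
agrees = {"C": 2.0, "A": 1.0, "D": 0.0, "B": -1.0}     # reward model matches the labeler
disagrees = {"C": -1.0, "A": 0.0, "D": 1.0, "B": 2.0}  # reward model has it backwards
print(len(pairs), "pairs")
print("loss when agreeing:   ", round(reward_model_loss(agrees, pairs), 3))
print("loss when disagreeing:", round(reward_model_loss(disagrees, pairs), 3))
```

One ranking of four answers yields six training comparisons, which is why ranking is a cheap way to collect preference data.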

🔍 2. Google Search Rankings & Search Quality Raters

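Google’s search quality raters evaluate results, including side-by-side comparisons of two result sets for the same query, and judge which better matches the searcher’s intent. Google says these ratings are used to evaluate proposed changes rather than to rank pages directly. That same “A beats B” signal is exactly what pairwise learning-to-rank algorithms like RankNet and LambdaMART (both from Microsoft Research) are built to learn from.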

🎵 3. Spotify Recommendations

Spotify can read implicit pairwise signals from how you listen: you skipped track A but played track B all the way through. Signals like that help refine your “Discover Weekly” and radio-style recommendations.

🧠 4. Anthropic’s Constitutional AI

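Constitutional AI, the approach Anthropic uses in training Claude, swaps many human labels for AI ones. A model compares pairs of responses against a written set of principles (the “constitution”), and those AI-generated preferences train a preference model that is then used for reinforcement learning. The goal: align Claude with human values without hand-labeling every harmful example.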

📱 5. TikTok’s For You Page

While not always explicitly framed as pairwise, TikTok’s algorithm learns preferences from implicit comparisons: You scrolled past video A in 0.3 seconds, but watched video B three times. Those signals build a ranked attention profile.
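The Spotify and TikTok examples share a trick: turning behavior into “A beat B” pairs. Neither company publishes its exact rules, so the threshold below (a 0.3 gap in completion ratio, where rewatches can push the ratio past 1.0) is a hypothetical stand-in, as are the `View` class and `implicit_pairs` helper. The resulting pairs could feed any pairwise preference model.

```python
from dataclasses import dataclass


@dataclass
class View:
    item: str
    watched_seconds: float  # total watch time, including rewatches
    length_seconds: float


def implicit_pairs(session, min_gap=0.3):
    """Hypothetical rule: A beats B when A's completion ratio exceeds B's by at least min_gap."""
    ratios = {v.item: v.watched_seconds / v.length_seconds for v in session}
    pairs = []
    for a, ratio_a in ratios.items():
        for b, ratio_b in ratios.items():
            if ratio_a - ratio_b >= min_gap:
                pairs.append((a, b))
    return pairs


session = [
    View("A", watched_seconds=0.3, length_seconds=15),  # scrolled past almost instantly
    View("B", watched_seconds=45, length_seconds=15),   # watched three times
    View("C", watched_seconds=7.5, length_seconds=15),  # watched halfway
]
print(implicit_pairs(session))
```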

Why This Works

It’s data-efficient

It captures subjective nuance

It avoids needing a “perfect” label—just a better one
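That last point is the subtle one. Two people can agree completely on which image is better while using a rating scale in wildly different ways; pairwise labels throw away the scale and keep only the order. A tiny Python illustration with two hypothetical raters:

```python
def to_pairs(scores):
    """Every (preferred, other) pair implied by item -> score ratings; ties are skipped."""
    return {(a, b) for a in scores for b in scores if scores[a] > scores[b]}


# Two hypothetical raters who agree on the order but use a 1-10 scale very differently.
harsh = {"fog": 4, "neon": 2, "cake": 3}
generous = {"fog": 10, "neon": 6, "cake": 8}

print(sum(harsh.values()) / 3, sum(generous.values()) / 3)  # absolute scores disagree
print(to_pairs(harsh) == to_pairs(generous))                 # relative judgments agree
```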

Why This Matters for Marketing

In creative workflows, specificity is everything. Stock-style renderings and vague aesthetics are easy to spot. But a custom-trained visual model that knows you love dusty jewel tones and retro cereal box composition? That can shortcut hours of iteration.

For solo marketers, small teams, or anyone building brand IP, your Midjourney profile becomes an unspoken art director. And it’s trained in less than 10 minutes.

200 choices. Infinite impact.

tl;dr: Midjourney Profile Training

You vote on 200 image pairs

Midjourney builds a latent preference vector

You get a profile code to bias outputs (--p abc123)

This steers generations toward your style

It’s preference learning (the same idea behind RLHF), but make it ✨vibes✨.

And yes, I’m using it on muffins.